
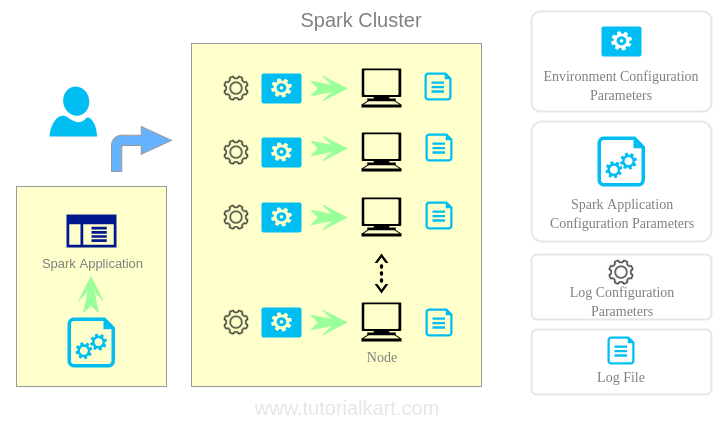

The “election” of the primary Master is handled by ZooKeeper. We’ll go through a standard configuration that allows the elected Master to distribute its jobs across the Worker nodes.
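A minimal sketch of that configuration, assuming Spark's standalone cluster manager with ZooKeeper-based recovery (the "Standby Masters with ZooKeeper" mode in the Spark documentation). It would go in `conf/spark-env.sh` on every Master node. The ZooKeeper address `zookeeper:2181`, the `/spark` directory and the `master1`/`master2` host names are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# conf/spark-env.sh on every Master node (sketch; host names are hypothetical).
# recoveryMode=ZOOKEEPER makes the Masters use ZooKeeper for leader election
# and to persist cluster state, so a standby Master can take over.
ZK_URL="zookeeper:2181"   # assumed host:port of the node named Zookeeper

SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER"
SPARK_DAEMON_JAVA_OPTS="$SPARK_DAEMON_JAVA_OPTS -Dspark.deploy.zookeeper.url=${ZK_URL}"
SPARK_DAEMON_JAVA_OPTS="$SPARK_DAEMON_JAVA_OPTS -Dspark.deploy.zookeeper.dir=/spark"
export SPARK_DAEMON_JAVA_OPTS

# Workers and applications then list every Master, e.g.
#   spark://master1:7077,master2:7077
# and register with whichever Master is currently elected.
```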

INSTALL APACHE SPARK LOCAL INSTALL

This topic will help you install Apache Spark on your AWS EC2 cluster. Add the dependencies needed to connect Spark and Cassandra. Connect via SSH to every node except the node named Zookeeper:

There are many ways of doing it; just use the one that best fits your needs.

We are done! If everything has worked fine, you will be able to run the spark-submit command again with no errors!

Extra ball

If, after following all the steps, you are getting the following exception:

`Exception in thread "main" : org/jets3t/service/S3ServiceException`

download jets3t from the Maven repository ( ) and copy it to your jars path.
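To confirm that the jets3t jar really ended up in the jars path, a small check can help. This is a minimal bash sketch, assuming the Homebrew jars directory used elsewhere in this guide; `has_jar` is a hypothetical helper, not part of Spark.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a jar whose file name starts with PREFIX
# exists in Spark's jars directory (default: the Homebrew path from this guide).
has_jar() {
  local prefix="$1"
  local jars_dir="${2:-/usr/local/Cellar/apache-spark/2.4.5/libexec/jars}"
  local jar
  for jar in "$jars_dir/$prefix"*.jar; do
    # With no match the pattern stays literal, so check that the file exists.
    [ -e "$jar" ] && return 0
  done
  return 1
}

# Example:
#   has_jar jets3t || echo "jets3t is missing from the jars directory" >&2
```

Matching on the file-name prefix keeps the check independent of which jets3t version you downloaded.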

INSTALL APACHE SPARK LOCAL CODE

Once downloaded, copy it to the jars directory.

Step 3: Configure AWS access and secret keys

You can choose any of the available methods to provide S3 credentials. You can use environment variables:

- `export AWS_ACCESS_KEY_ID=my.aws.key`
- `export AWS_SECRET_ACCESS_KEY=my.secret.key`

Or include them in your code:

- `sc.hadoopConfiguration.set("fs.s3a.access.key", "my.aws.key")`
- `sc.hadoopConfiguration.set("fs.s3a.secret.key", "my.secret.key")`
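If you go with environment variables, a run script can check that both are exported before calling spark-submit. This is a minimal POSIX-shell sketch; `require_aws_env` is a hypothetical name, and it only checks that the variables are non-empty, not that the keys are valid.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail fast if the Step 3 credentials are not exported.
# Only checks for non-empty values; it does not contact AWS.
require_aws_env() {
  local missing=0
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ]; then
    echo "missing environment variable: AWS_ACCESS_KEY_ID" >&2
    missing=1
  fi
  if [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "missing environment variable: AWS_SECRET_ACCESS_KEY" >&2
    missing=1
  fi
  return "$missing"
}
```

Chaining it as `require_aws_env && spark-submit ...` reports a forgotten export up front rather than as a failure inside the job.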

INSTALL APACHE SPARK LOCAL DOWNLOAD

First of all, you need the command to run an Apache Spark task locally. I usually run them using:

`spark-submit --deploy-mode client --master local --class <main-class> --name App target/path/to/your.jar argument1 argument2`

Another consideration before we start is to use the correct S3 handler, since a few of them are already deprecated. This guide uses s3a, so make sure that all S3 URLs look like `s3a://bucket/path`.

If you don't have Apache Spark installed locally, follow the steps to install Spark on macOS.

Now, if you run the spark-submit command using the default Apache Spark installation, you will get the following error:

`.FileSystem: Provider .s3a.S3AFileSystem could not be instantiated`

You need to include the required dependencies in your Spark installation. You can also add the packages as dependencies of your own package, but usually these packages are already provided by the Spark installation (especially if you are using AWS EMR).

Step 1: Download Hadoop AWS

Check the Hadoop version that you are currently using. You can get it from any jar present in your Spark installation. Since my Spark is installed in `/usr/local/Cellar/apache-spark/2.4.5/libexec`, I check the version as follows:

`$ ls /usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-*`

- `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-annotations-2.7.3.jar`
- `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-auth-2.7.3.jar`
- `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-client-2.7.3.jar`
- `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-common-2.7.3.jar`
- `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars/hadoop-hdfs-2.7.3.jar`

As you can see, the Hadoop version is 2.7.3, so I downloaded the hadoop-aws 2.7.3 jar from the following URL:

Copy the jar to your Spark installation (in my case, `/usr/local/Cellar/apache-spark/2.4.5/libexec/jars`).

Step 2: Install AWS Java SDK

You have to download the exact same version that was used to generate the hadoop-aws package. You can find it at the bottom of the page you downloaded hadoop-aws from. As you can see in the image, the aws-java-sdk version is 1.7.4; you can download it from:
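Steps 1 and 2, together with the s3a:// rule from the start, can be checked before each run. This is a minimal bash sketch, assuming the Homebrew jars path used in this guide (overridable through a hypothetical `JARS_DIR` variable) and that the jars keep their Maven file names. `hadoop_version`, `check_s3a_jars` and `require_s3a` are illustrative names, not Spark or Hadoop tools; like the `ls` approach above, the version is read from a jar's file name.

```shell
#!/usr/bin/env bash
# Pre-flight sketch for Steps 1 and 2 plus the s3a:// rule (hypothetical helpers).
# Assumes jars keep their Maven file names, e.g. hadoop-common-2.7.3.jar,
# and that the directory holds a single hadoop-common jar.
JARS_DIR="${JARS_DIR:-/usr/local/Cellar/apache-spark/2.4.5/libexec/jars}"

# Step 1: read the Hadoop version from the hadoop-common jar's file name.
hadoop_version() {
  local name
  set -- "$JARS_DIR"/hadoop-common-*.jar   # expand the glob into $1, $2, ...
  [ -e "$1" ] || return 1                  # no match: the glob stayed literal
  name=${1##*/}                            # hadoop-common-2.7.3.jar
  name=${name#hadoop-common-}              # 2.7.3.jar
  echo "${name%.jar}"                      # 2.7.3
}

# Steps 1 and 2: hadoop-aws must match the Hadoop version, and the
# aws-java-sdk version it was built against (1.7.4 here) must be present.
check_s3a_jars() {
  local sdk_version="${1:-1.7.4}" version jar status=0
  version=$(hadoop_version) || {
    echo "no hadoop-common jar found in $JARS_DIR" >&2
    return 1
  }
  for jar in "hadoop-aws-$version.jar" "aws-java-sdk-$sdk_version.jar"; do
    if [ ! -e "$JARS_DIR/$jar" ]; then
      echo "missing $JARS_DIR/$jar" >&2
      status=1
    fi
  done
  return "$status"
}

# s3a rule: reject s3:// and s3n:// URLs, since this guide relies on s3a://.
require_s3a() {
  local url
  for url in "$@"; do
    case "$url" in
      s3://*|s3n://*)
        echo "use s3a:// instead: $url" >&2
        return 1
        ;;
    esac
  done
  return 0
}
```

For example, `check_s3a_jars && require_s3a s3a://bucket/path && spark-submit ...` surfaces a missing or mismatched jar before Spark tries to load the s3a file system.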

Running Apache Spark and S3 locally · Apr 22, '20 · 3 min read · Tags: Apache Spark, S3, Big data, AWS, Hadoop
